SPECIAL REPORT: Cybersecurity

Our team's special coverage on Cybersecurity

Budget cut ‘catastrophic’ to cybersecurity operation, Biden officials say

The newly elected Speaker Mike Johnson (R-La.) voted in September for an unsuccessful amendment to cut CISA’s budget by 25%, which officials said would cripple existing cybersecurity programs.

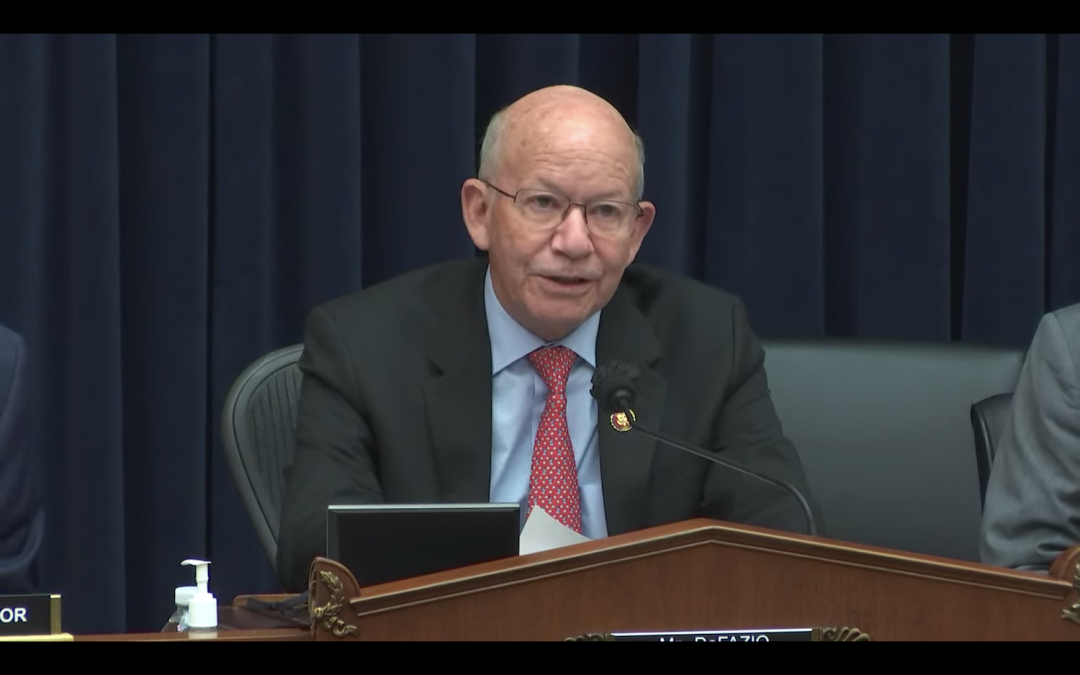

GOP leaders, critical infrastructure industry officials criticize TSA cybersecurity regulations

Common sense steps go a long way in strengthening cyber defense measures and mitigating the likelihood of successful attacks. But most companies don’t have these basic and affordable systems in place.

Big tech companies need to be liable for illicit activity on their platforms, experts tell House committee

Most of the organized crime syndicates operate on the open web and tech companies should be liable for their activity on their websites.

GAO report: Not clear if U.S. infrastructure protected from cyber threats

A report from the Government Accountability Office showed federal agencies had largely failed to collect data on cybersecurity improvements among the nation’s critical infrastructure.

For Election Security, Experts Say Paper May Still Be The Answer

“A really serious attack on the integrity of the election, which [Russia] probably could accomplish if they wanted to, would require paper ballots to recover from,” he said. “If you can go back and you can actually physically count ballots, that’s a way to assure the American people that you will never be able to do digitally.”

As 2020 Looms, Election Security Experts Worry

WASHINGTON — Despite at least 21 states being the target of Russian hacking in the 2016 election, experts say that there is no evidence that a foreign power has interfered in the outcome of a U.S. election then or since. But election cybersecurity experts say 2020 is “the bigger prize” and not enough is being done to address the threat.

“[Russia is] going to strike when it’s in their interest to strike and unfortunately our technology is not yet there to stop them,” said University of Michigan computer science professor J. Alex Halderman. “I think 2020 is going to be the bigger prize.”

Halderman is one of the premier election security experts in the U.S., a title that comes with a peculiar credential. In April, Halderman demonstrated the vulnerability of the U.S. election system by hacking a set of voting machines, which he then used to rig a mock election where students at the University of Michigan had to choose between their school and archrival Ohio State University.

Thanks to Halderman’s tampering, Ohio State won the vote — an occurrence that any Big 10 fan should know falls somewhere after pigs flying on a list of events ordered by probability. The experiment proved Halderman’s point, though. The U.S. election system, simply put, is severely — and in the eyes of election security experts, frighteningly — exposed to a cyberattack.

“We are still vulnerable,” said Richard Andres, a professor of national security strategy at the National War College. “The critical infrastructure is highly vulnerable and we should work hard to make it less vulnerable, but that’s going to be very tricky.”

That assertion by Andres and others is supported not only by nonpartisan groups like the Center for Strategic and International Studies and Harvard University’s Defending Digital Democracy Project, but also by the U.S. intelligence community.

In the 2019 Worldwide Threat Assessment, an annual document produced by the Director of National Intelligence, U.S. intelligence agencies expressed not only that “our adversaries and strategic competitors probably already are looking to the 2020 U.S. elections as an opportunity to advance their interests,” but that U.S. adversaries are continuing to advance and refine their capability to influence U.S. elections.

Specifically, those adversaries include Russia, China and Iran. According to a CSIS survey from 2018, 81 percent of cybersecurity experts are most concerned about the threat coming from Russia, whose intelligence services are responsible for well-documented influence campaigns in both the 2016 and 2018 elections.

William Carter, deputy director for the CSIS technology policy program, says that he is very worried about the prospect of a cyberattack on the 2020 election, but it’s not vulnerable voting machines that keep him up at night.

To understand where the Russian threat is coming from, he says, “you have to understand the way that they think about attacking our elections.”

According to Carter, Russia wants to undermine confidence in American democracy and institutions. But, he adds, “they want to do so in a way that is below the threshold where we will retaliate in a meaningful way.”

As such, Carter’s concern isn’t the vote tally itself, but rather public confidence in the election results.

Andres, who is a senior fellow at the George Washington University Center for Cyber and Homeland Security in addition to his post at the National War College, worries about something similar. To him, a worst-case scenario cyberattack could see vote tallies manipulated so that they appear differently in different places in order to undermine the perceived integrity of election systems.

“All [the Russians] have to do is do it enough places … that no American was sure whether his or her vote had been accurately tallied,” he said. “The psychological ramifications of having everyone in the country worry that their vote was not accurately counted … it has the potential to paralyze the country for a fair period of time.”

While Carter and Andres have different visions of the potential threat, they agree that an attack, in some form, is highly likely. And they both say that not enough is being done to avert such an attack.

According to Andres, much of the problem stems from states such as Georgia being unwilling to accept help from the federal government. “States do not want the federal government messing with their voting machines,” he said.

However, Eric Rosenbach, director of the Harvard-affiliated Defending Digital Democracy Project, says that states are not equipped to go it alone.

“[Russia] is a nation-state actor,” Rosenbach said in a committee hearing last month. “The states are not designed to have cybersecurity to defend against that threat.”

The Department of Homeland Security is offering a battery of free cybersecurity services to states upon request, including cybersecurity advisers, information sharing, incident response and cybersecurity assessments. The majority of states are taking advantage of these services — according to CSIS, all but nine states have installed the DHS’s Albert sensors, which monitor election systems for an intrusion. However, those nine states that haven’t installed the sensors represent weak links in the broader U.S. election system. According to Halderman, it may not matter which state is targeted by cyberattacks if that attack undermines confidence in the national outcome.

“Until we bring up the most weakly protected states to an adequate level of security,” he said, “the whole nation will be at risk.”

To do that, there is a laundry list of issues to address. The absence of any paper records in several states is one notable issue. Paul Rosenzweig, a senior fellow for national security and cybersecurity at the R Street Institute, says generating a paper trail needs to be a top priority. Beyond that, he says, more money is hugely important to improving cybersecurity in states, simply because modernizing voting machines is expensive.

According to Carter, that money likely isn’t coming.

“I just don’t see the states, or frankly the federal government in the current budget environment, ponying up the type of funding that would be necessary,” he said. “The update to the [Help America Vote Act] funding was like $380 million, which is a hilariously small amount.”

More funding for states to improve their election security has been proposed in the past. In the previous Congress, Rep. Bennie Thompson, D-Miss., introduced the Election Security Act, which included grant funding for states and a mandate that they use paper ballots among other provisions, but it never received a vote.

Thompson’s bill was also incorporated into the For The People Act, H.R.1, in the 116th Congress. The omnibus bill passed the House 234-193 earlier this month, but Senate Majority Leader Mitch McConnell has said he will not bring it up in the Senate.

Thompson, who now chairs the House Committee on Homeland Security, said in a January press release that “[election security] should not be a partisan issue, but Congress has done far too little to prevent foreign election meddling after Russia interfered in the 2016 election.”

In any case, with H.R.1 at a dead end in the Senate, there is no new election security funding on the horizon.

And in 2020, with election security still underfunded unless a bipartisan agreement can be reached, Carter says that “[Russia’s] going to be coming at us with everything they’ve got.”

That isn’t to say that the U.S. is undefended. “There are a lot of very smart people spending a lot of time and money to make sure that our elections go off without a hitch and that the vote is accurately captured no matter who is trying to interfere with our elections,” Carter said. But still, he worries about whether public confidence in U.S. institutions can endure another assault by Russia.

“The biggest risk,” Rosenzweig said, “is that this helps to destroy America’s confidence in its accuracy, so that after the vote, whoever loses gets to say ‘the winner is illegitimate.’”

International Cooperation Necessary to Keep Japan Cybersecure During the 2020 Olympics, Say Experts

WASHINGTON—Hosting the 2020 summer Olympic games makes Tokyo a target for cyberattacks, and the best defense is international cooperation, experts said Tuesday.

At last year’s games in South Korea, cyberattacks downed hundreds of computers, taking the Internet and TV systems offline for half a day.

The Washington Post reported that U.S. intelligence had identified Russia as the culprit, though the attack was made to look like North Korea had carried it out. Russia was blocked from competing in South Korea due to doping violations.

“These kinds of international events are ripe for bad actors like Russia to carry out cyberattacks, whether they’re testing new capabilities or whether they’re angry they’re prevented from participating,” said Meg King, a strategic and national security adviser at the Woodrow Wilson Center, where the expert panel was convened.

With upcoming Olympic Games in Beijing, Paris and Los Angeles, Keio University professor Motohiro Tsuchiya said that the international community needs to coordinate defense strategies. The Japan Computer Emergency Response Team Coordination Center, for example, is a member of the global Forum of Incident Response and Security Teams, which helps resolve cyberattacks.

Tsuchiya, a professor of media and governance at Keio, said that Olympic host countries are vulnerable to blackouts and to hacks targeting transportation, the Internet, TV event streams and personal information on hotel and ticket booking sites.

Japan is directly testing its own population for weaknesses.

On Feb. 20, the government-backed National Institute of Information and Communications Technology began hacking over 200 million IP addresses connected to Japan, searching for poorly secured devices with common username and password combinations. Japan’s constitution prohibits tapping into personal communications, but in late January, the government approved an amendment allowing it to hack certain civilian devices.
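As a rough illustration of what checking for "common username and password combinations" means, the sketch below audits a made-up device inventory against a short list of default logins. It is not the institute's tooling: the credential list, the `find_weak_devices` helper and the sample inventory are all hypothetical, and the code compares stored records rather than logging in to any real device.

```python
# Hypothetical list of factory-default logins; a real survey would use a far longer list.
COMMON_CREDENTIALS = {
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
    ("user", "1234"),
}


def find_weak_devices(devices):
    """Return the addresses of devices whose login matches a common default pair."""
    return [
        device["address"]
        for device in devices
        if (device["username"], device["password"]) in COMMON_CREDENTIALS
    ]


# Made-up inventory using documentation-only IP addresses (192.0.2.0/24).
inventory = [
    {"address": "192.0.2.10", "username": "admin", "password": "admin"},
    {"address": "192.0.2.11", "username": "admin", "password": "x9!Lq2#vT"},
    {"address": "192.0.2.12", "username": "root", "password": "root"},
]

weak = find_weak_devices(inventory)
print(weak)
```

In this toy inventory, the first and third devices would be flagged because they still use default logins.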

The initiative eschews complex smartphones and instead tests the security of routers, webcams and other internet-enabled objects like smart home appliances. This network of newly connected devices is called the “Internet of things,” and it’s highly insecure.

Major Internet of things attacks in 2016 led to the outages of popular websites like Twitter, Spotify and Netflix.

Tsuchiya said that while Japan’s actions are a “violation of secrecy of communication,” and many people are opposed to the policy, “we want to have a better, cleaner cyberspace.”

Some doubt the country’s leadership, however. Last November, Japan’s recently appointed cybersecurity and Olympics minister told the parliament he’d never used a computer before.

But the International Olympic Committee, not host countries, controls systems in Olympic stadiums. The committee created a commission to address cybersecurity after a confidential document relating to the Russian doping investigation was hacked. Japan will administer aspects such as ticketing, the press center and visitor Wi-Fi.

The country’s efforts to control what it can are part of its recognition of the long-term need for cybersecurity.

“The Olympic games [are] a good target, but [they’re] not an ultimate target,” said Tsuchiya. “We have to survive those Olympic games, to live after that. We have to prepare for the future.”

Inside the government’s war against deepfakes

WASHINGTON — Adult film star Raquel Roper was watching a trailer for popular Youtuber Shane Dawson’s new series focusing on the video manipulation trend known as deepfakes. Near the end of the video, she noticed a clip taken from one of her films — but it wasn’t her face depicted onscreen. It was Selena Gomez’s.

Roper was shocked. “You’re taking … the artist’s work, and you are turning it into something that I didn’t want it to be. Selena Gomez has not given consent to have her face morphed into an adult video,” she said in a response video.

“There is no way for me to take legal action against this, and I think that’s what the scariest part of it all is.”

That may soon change. Members of Congress, government officials and researchers are in what some call an “arms race” against deepfakes and their creators, which could lead to legislation against this emerging technology.

The term “deepfakes” refers to videos that have used artificial intelligence techniques to combine and superimpose multiple images or videos onto source material, which can make it look as if people did or said things they did not. The most widely reported instances of deepfakes include celebrity pornography videos like Roper’s or video manipulations of politicians.

Some, however, believe deepfakes are not as dangerous as reported. The Verge argued deepfake hoaxes haven’t yet materialized, even though the technology is widely available.

In December, Sen. Ben Sasse, R-Neb., introduced a bill criminalizing the creation and distribution of harmful deepfakes — the first federal legislation of its kind. The bill died at the end of the 115th Congress on Jan. 3, but Sasse’s office said he plans to introduce it again in the current session of Congress.

“Washington isn’t paying nearly enough attention…” Sasse said. “To be clear, we’re not talking about a kid making photoshops in his dorm room. We’re talking about targeting the kind of criminal activity that leads to violence or disrupts elections. We have to get serious about these threats.”

Bobby Chesney, director of the Robert S. Strauss Center for International Security and Law at the University of Texas School of Law, briefed House Intelligence Committee Chairman Adam Schiff on the issue. Chesney said the legal solution to the proliferation of harmful deepfakes would not be a complete ban on the technology, which he said would be unethical and nearly impossible to enforce. What is feasible, he said, would be a federal law that would further penalize state crimes many deepfake creators are already committing — whether it’s fraud, intentional infliction of emotional distress, theft of likeness or similar statutes.

Chesney said Sasse’s bill seems to have that purpose, but the problem will most likely not be solved with one piece of legislation.

Government agencies have also begun fighting the falsified videos through research into detection and protection against malicious deepfakes. The Defense Advanced Research Projects Agency began researching methods to counteract deepfakes in 2016 through its Media Forensics program, which has focused on creating technical solutions such as automatically detecting manipulations and providing information on the integrity of visual media.

The program manager, Dr. Matt Turek, said the goal is to create an automated system across the internet that would provide a truth measure of images and videos.

To do so, Turek said, researchers look at digital, physical and semantic indicators in the deepfake. Inconsistencies in pixel levels, blurred edges, shadows, reflections and even weather reports are used to detect the presence of a manipulation.

One of the researchers, Siwei Lyu, an associate professor of computer science at the University at Albany, and his team have been looking into detection of deepfakes by exploring two signals he said the fakes often possess: unrealistic blinking and facial movement.
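To make the blinking signal concrete, here is a minimal sketch of a blink-rate check. It is a simple threshold heuristic, not the method used by Lyu's team. It assumes a hypothetical upstream step has already produced one "eye openness" score per video frame (1.0 for fully open, 0.0 for closed), and the cutoffs `closed_below` and `min_rate` are illustrative guesses rather than published values.

```python
def count_blinks(openness, closed_below=0.2):
    """Count blinks as transitions from an open eye to a closed eye."""
    blinks = 0
    eye_closed = False
    for value in openness:
        if value < closed_below and not eye_closed:
            blinks += 1          # the eye has just closed: one new blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False   # the eye has reopened
    return blinks


def blinks_per_minute(openness, fps=30.0, closed_below=0.2):
    """Convert a per-frame openness sequence into a blink rate."""
    if not openness:
        return 0.0
    minutes = len(openness) / fps / 60.0
    return count_blinks(openness, closed_below) / minutes


def looks_suspicious(openness, fps=30.0, min_rate=5.0):
    """Flag a clip whose subject blinks less often than the assumed minimum rate."""
    return blinks_per_minute(openness, fps) < min_rate


# Two made-up 10-second clips at 30 frames per second.
natural_clip = ([1.0] * 97 + [0.1] * 3) * 3   # three short blinks
unblinking_clip = [1.0] * 300                 # eyes never close

print(looks_suspicious(natural_clip), looks_suspicious(unblinking_clip))
```

A check like this would only be one weak signal among many; a clip could fail it for innocent reasons, such as a very short duration.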

Lyu’s team is also attempting to stop the creation of deepfakes by inserting “noise” into photos or videos that would stop them from being used in automated deepfake software. This “noise,” he said, would most likely be added numbers or pixels inserted into the image, and would be imperceptible to the human eye.
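The idea of noise that changes pixel values without being visible can be sketched as follows. This is only an illustration of a small, bounded perturbation on a grayscale image stored as rows of 0 to 255 integers. The random noise here is a stand-in: a working tool would presumably choose its noise deliberately to disrupt deepfake software, and the function names and the `epsilon` bound are assumptions made for this example.

```python
import random


def add_bounded_noise(pixels, epsilon=2, seed=0):
    """Shift each 8-bit pixel by at most `epsilon`, keeping values within 0..255."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    return [
        [min(255, max(0, value + rng.randint(-epsilon, epsilon))) for value in row]
        for row in pixels
    ]


def max_change(original, modified):
    """Largest absolute per-pixel difference between two same-sized images."""
    return max(
        abs(a - b)
        for row_a, row_b in zip(original, modified)
        for a, b in zip(row_a, row_b)
    )


# A tiny made-up 2x3 grayscale image.
image = [[0, 128, 255], [64, 200, 10]]
noisy = add_bounded_noise(image)

print(max_change(image, noisy))
```

Because no pixel moves by more than `epsilon` out of 255 levels, the altered image would look the same to a viewer while its underlying numbers differ.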

An early prototype will be available on ArXiv, an electronic repository of scientific papers, by early March, he said. The tool could be used as an add-on plugin for users before they upload an image or video online, he said, or could be an add-in used by platforms like Instagram or YouTube to protect images and videos already uploaded by users.

Especially with Russian involvement in the 2016 election, Lyu said, protections are needed against malicious false videos affecting politics.

“So far, deepfake videos have been generated by individuals — a bigger organization hasn’t done it,” he said. “If there is sponsoring of this activity I think they will actually cause a lot of problems.”

Turek said the research project is expected to wrap up in 2020.

However, many deepfake creators and tech experts are worried about overregulating the technology. Alan Zucconi, a London-based programmer, is a creator of deepfake tutorials posted on his website and Patreon. They teach people about deepfakes’ potential positive applications, he said, such as historical re-enactments, more realistic dubbing for foreign language films, digitally recreating an amputee’s limb or allowing transgender people to see themselves as a different gender.

Zucconi said the definition of a deepfake given in Sasse’s bill is wrong. The bill defines it as “an audiovisual record created or altered in a manner that the record would falsely appear to a reasonable observer to be an authentic record of the actual speech or conduct of an individual.” But Zucconi said a deepfake is actually a specific product created by a process called deep learning that concerns artificial intelligence.

The solution to malicious deepfakes, he said, is education, which he said should include the topic of consent. Victims of deepfakes typically do not consent to their likenesses being used, he said, and women are disproportionately targeted. Zucconi said this points to a larger issue.

“Deepfakes are not the problem,” he said. “Deepfakes are the manifestation of something much more complex that we as a society need to address.”

Another creator of deepfakes, YouTube personality “derpfakes,” agreed, saying education would be a more effective solution than regulation. Creators of malicious deepfakes would “likely not be dissuaded by such things” as legislation, he said.

Derpfakes has focused on creating satirical deepfakes, such as inserting the actor Nicolas Cage into various pieces of pop culture. So far, derpfakes has inserted Cage into movie series like The Terminator, James Bond, Indiana Jones and Star Trek.

“My main goal with my deepfakes is to bring a smile to some faces and to show the world that deepfakes are not inherently a bad thing,” derpfakes said.

Raquel Roper, however, hopes for a law to prevent the spread of malicious deepfakes like her own stolen video.

“I feel so shocked that this is legal,” she said. “…the original video is a video that I charge people money for…. It is my product. You just need to be more aware that what you may think is just a joke or a fun little project for you, it can really affect people.”

Pentagon Officials Tout Advances in AI, Stress Need for More Development

WASHINGTON – Developing more complex artificial intelligence is necessary for the United States to keep pace with Russia and China, top defense technology officials said Tuesday.

Leaders from several Defense Department technology agencies told senators at an armed services subcommittee hearing that efforts are underway to create more advanced AI systems that can learn and use reasoning.

The Defense Advanced Research Projects Agency’s “research efforts will forge new theories and methods that will make it possible for machines to adapt contextually to changing situations,” said DARPA Deputy Director Peter Highnam.

Lt. Gen. John Shanahan, director of the Defense Department’s new Joint Artificial Intelligence Center, called for a greater sense of urgency in developing technology.

“The speed and scale of technological change required is daunting,” he said. “Ultimately, this [sense of urgency] needs to extend across our entire department, government and society.”