WASHINGTON – Testimony Tuesday from a Meta whistleblower about the company’s reluctance to act on online safety and privacy prompted a rare bipartisan push for legislation to protect children online.

“Meta knows the harm kids experience on their platform, and the executives know that their measures fail to address it,” said Arturo Béjar. “There are actionable steps that Meta can take to address the problem, and … they are deciding time and time again to not tackle this issue.”

Béjar, a former Facebook engineering director and consultant, worked with Instagram’s Well-Being Team on a new questionnaire called BEEF, or Bad Emotional Experience Feedback, that asked users directly about their negative experiences on the platform. The questionnaire’s results suggested that users have negative experiences at a much higher rate than the statistics Meta publicized.

For example, among users 16 years old and younger, 26 percent remembered having a negative experience in the past week due to witnessing hostility directed at someone based on their race, religion or identity, and 13 percent reported experiencing unwanted sexual advances in the previous week, Béjar said during his testimony Tuesday.

But when he brought those results to Meta executives, no action was taken, Béjar said. The company allowed him to publish a memo internally on his team’s findings, but he could not include any data from the questionnaire.

Since Béjar left Meta in October 2021, the company has halted the BEEF questionnaire and laid off most of the people who worked on the project, according to The Wall Street Journal.

Meta’s content moderation and online safety efforts were already under-resourced during his time at the company, Béjar said, and the layoffs further suggest that Meta is unwilling to directly tackle the problems Facebook and Instagram users face, or to invest in solving them.

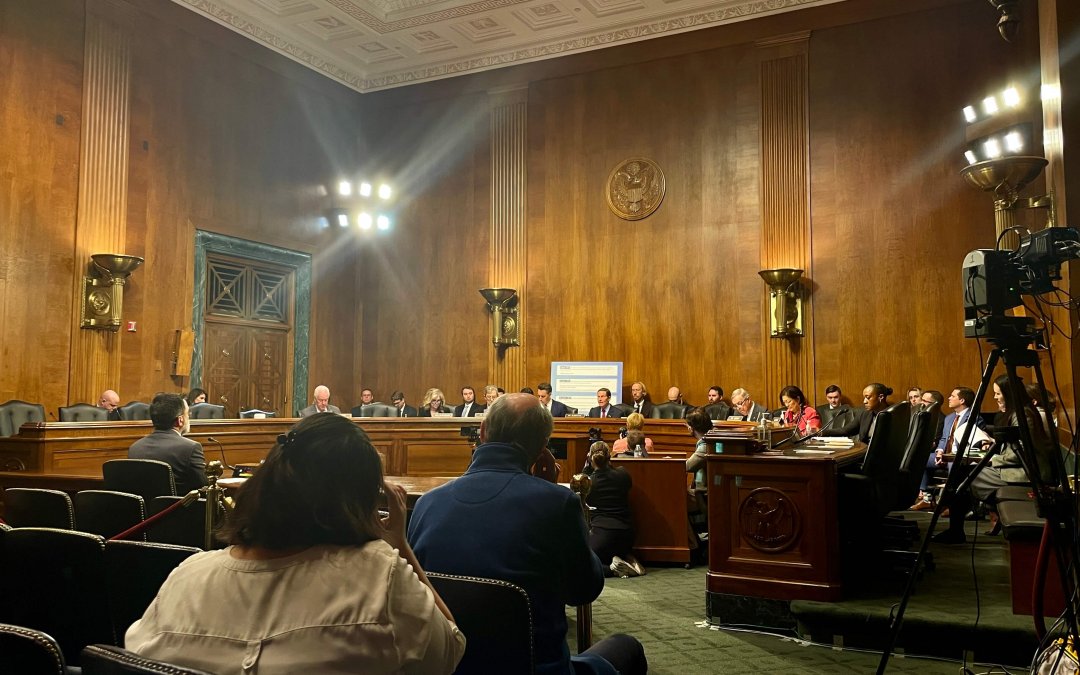

For more than two hours, senators questioned Béjar about a wide range of issues on social media, from the promotion of content about harmful body image and identity-based harassment to drug sales and child pornography, and how Congress could address them.

Béjar stressed that the basis for solving all of these problems is transparency. Meta needs to publicly disclose its internal statistics on online safety, like the data he brought to light, as well as information about its content recommendation algorithm, which he said actively pushes harmful content to users.

Congress also needs to pass legislation that focuses on influencing how social media platforms are designed and not on censoring content, he added.

Though the senators addressed different topics, the panel agreed that Congress needs to act on social media regulation. Sen. Dick Durbin (D-Ill.), the chair of the Judiciary Committee, said Congress urgently needs to rein in Big Tech and is ready to do so. Ranking member Sen. Lindsey Graham (R-S.C.) said there needs to be protection for children online because “a society that cannot take care of his children or refuses to has a bleak future.”

Durbin and Graham also joined Sen. Josh Hawley (R-Mo.) in advocating for legislation ending Section 230, a provision of the Communications Decency Act of 1996 that shields “interactive computer service” providers, like social media companies, from liability for third-party content posted on their platforms.

“At the end of the day, if you want to incentivize changes to these companies, you’ve got to allow people to sue them. You’ve got to open up the courtroom doors,” Hawley said.

Béjar was not the first Meta employee to come forward with allegations of the company’s harmful conduct. In 2021, Frances Haugen, a former engineer and product manager, testified before the Senate Commerce Committee that Meta knowingly covered up how its products harm their users’ mental health and boost misinformation.

But Béjar’s testimony came at a critical time. A coalition of 42 state attorneys general filed a lawsuit against Meta in October, alleging the company deployed addictive features to hook children on Facebook and Instagram and exploited their personal information in violation of federal policy and consumer protection laws. In his interview with The Wall Street Journal, Béjar said he consulted with the coalition of state attorneys general that filed the lawsuit.

Several bills on children’s online safety and privacy have already entered the legislative pipeline this term. The Children and Teens’ Online Privacy Protection Act, an update to the Children’s Online Privacy Protection Act of 1998 spearheaded by Sens. Edward Markey (D-Mass.) and Bill Cassidy (R-La.), would ban targeted advertising to children. It would also prohibit social media companies from collecting personal information from users under the age of 16 without their consent, raising the age threshold from the previous limit of 13.

Sens. Richard Blumenthal (D-Conn.) and Marsha Blackburn (R-Tenn.), who both sit on the Judiciary subcommittee, also reintroduced the more ambitious Kids Online Safety Act (KOSA) in May. The bill would make social media platforms responsible for preventing and mitigating harm to users who are minors and require them to undergo annual independent audits on compliance. Both bills advanced out of the Senate Commerce Committee in July.

Speaking with reporters ahead of the hearing, Blumenthal said the Kids Online Safety Act already has more than 40 co-sponsors. He said he hopes the bill will reach the Senate floor this year or early next year. And during the hearing, he joined Hawley’s pledge to seek a vote on the bill before the end of the year.

“It’s big tobacco all over again … and Congress needs to act,” Blumenthal said. “We can no longer rely on social media’s mantra: ‘trust us.’”