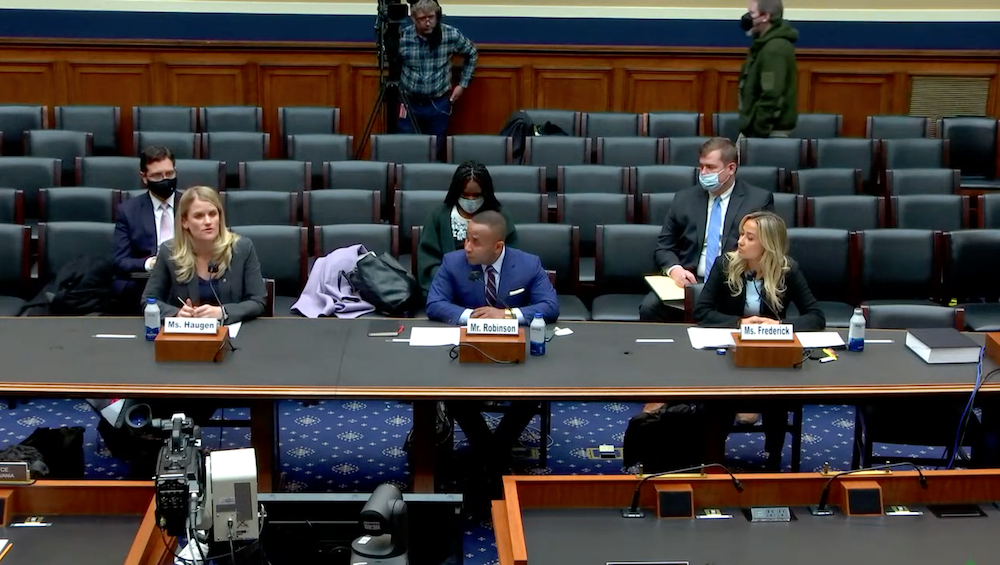

WASHINGTON — Facebook whistleblower Frances Haugen testified before Congress for the second time on Wednesday, warning lawmakers that getting caught up in partisan battles over legislation to hold digital platforms accountable for causing harm to users is just what Big Tech is hoping for.

The hearing is the first of two this month aimed at holding Big Tech accountable for its content, for which there appears to be bipartisan support. But there is little consensus on how to do it.

Haugen warned members of a House technology subcommittee that Facebook, which recently changed its corporate name to Meta, wants them to “get caught up in a long, drawn-out debate over the minutiae of different legislative approaches.”

“Please don’t fall into that trap. Time is of the essence,” she said. Haugen disclosed thousands of pages of internal documents to the government revealing Facebook’s negative effects on users.

But during a hearing of the House Energy and Commerce Committee’s Subcommittee on Communications and Technology, lawmakers spent about six hours debating a variety of ideas on how to legislatively address issues ranging from misinformation, discrimination, hate speech and censorship to the harmful effects of social media on youth mental health.

The bills discussed at the hearing, all introduced by Democratic House members, would amend Section 230 of the Communications Decency Act of 1996, which allows digital platforms to host and moderate their users’ content without the threat of lawsuits.

The broad goal of reforming the courts’ interpretation of Section 230 to hold platforms accountable for the content they distribute has bipartisan support. But lawmakers differ on how to achieve that goal.

Committee Chair Frank Pallone, D-N.J., expressed disappointment that his Republican colleagues did not include drafts of their legislation for consideration at Wednesday’s hearing.

“In order to actually pass legislation that will begin to hold these platforms accountable, we must work together, and I urge my colleagues not to close the door on bipartisanship for an issue that is so critical,” Pallone said.

The top Republican on the committee, Rep. Cathy McMorris Rodgers from Washington, said tech companies are censoring political speech, especially conservative voices, on their platforms that should be constitutionally protected under the First Amendment.

McMorris Rodgers said she is working on legislation that would remove protections for companies when they take down constitutionally protected speech.

But Rashad Robinson, president of the racial justice organization Color of Change, said freedom of speech does not mean freedom from the consequences of speech, especially if the information is untrue or harmful.

Facebook redesigned its algorithm in 2018 to prioritize content that is most likely to be shared by others, Haugen said. While Facebook said the purpose was to prioritize “meaningful social interactions,” she said the platform’s algorithm ends up prioritizing the most provocative content that will get the most clicks and shares — often including hate speech and misinformation.

Robinson said that Facebook’s algorithm disproportionately harms people of color by using personal information in targeting ads that limit information about voting, housing and job opportunities. He also said the algorithm subjects people of color to larger amounts of misinformation than white users.

Two bills discussed during the hearing, the Protecting Americans from Dangerous Algorithms Act and the Justice Against Malicious Algorithms Act of 2021, would allow companies to be held liable when they use personalized algorithms to recommend harmful content. A third, the Civil Rights Modernization Act of 2021, would clarify that platforms are not shielded from lawsuits for civil rights violations from targeted advertising.

Robinson called on Congress in his written testimony to take antitrust action against Big Tech companies and work toward ending their concentration of power and suppression of competition in the industry.

He applauded the committee’s efforts to include funding in President Joe Biden’s Build Back Better Act to create a Privacy Bureau at the Federal Trade Commission and to fund the FTC’s antitrust fights.

Scott Wallsten, president and senior fellow of the Technology Policy Institute, said in an interview that people view Facebook as having such a large share of the market that there are overlapping motivations between Section 230 reform and antitrust reform. Still, he said the two issues should be addressed separately.

At a panel event hosted by the conservative American Enterprise Institute on Tuesday, Wallsten joined several other economists and lawyers in discussing how Congress can act more constructively on antitrust — including several pieces of legislation in both the House and Senate.

Though the panelists had varying levels of support for the bills, they agreed that more funding for government agencies would make antitrust enforcement more effective. In addition to funds allocated in the Build Back Better Act, the Merger Filing Fee Modernization Act would authorize $252 million for the Antitrust Division of the Department of Justice and $418 million for the FTC.

Wallsten said that agreeing on reforms to Section 230 is difficult because platforms can never make all of their users happy when they moderate content on a website.

“There will always be people who want things down (on a site) that stay up and other people who want things that come down,” he said. “And because we have so many different preferences, there’s just not going to be stable equilibrium.”