WASHINGTON – Journalism executives called on legislators Wednesday to require generative artificial intelligence developers to license and pay for news content they are using to train their software.

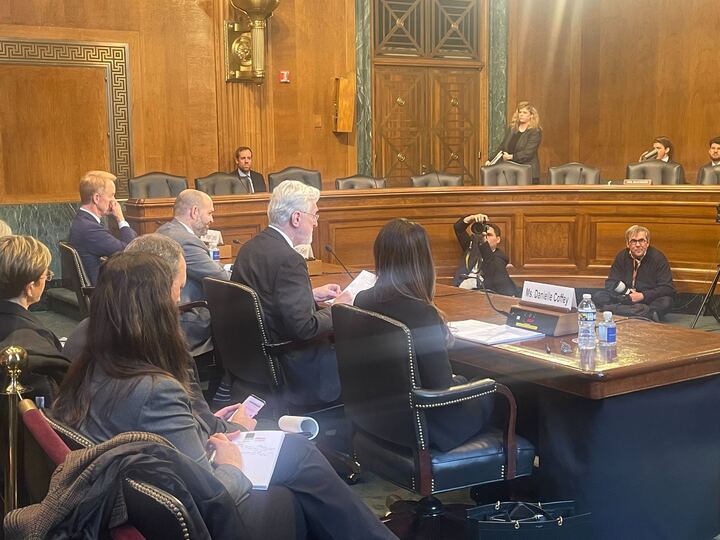

The use of AI to spread misinformation has been a growing worry for industry and lawmakers. In addition, journalism organizations are pressing for compensation for use of their work, as declining revenue in the news industry continues to lead to widespread closures and layoffs. Both issues were closely examined during a hearing Wednesday by the Senate Judiciary Subcommittee on Privacy, Technology and the Law.

“Local reporting is the lifeblood of our democracy, and it is in crisis,” said Sen. Richard Blumenthal (D-Conn.), the subcommittee’s chair. “It is a perfect storm, the result of increasing costs, declining revenue and exploding disinformation.”

So far, AI companies have claimed that they don’t need to compensate news outlets under “fair use,” the doctrine that says copyrighted material can be directly quoted for limited or transformative use. This is prompting industry executives to call on the subcommittee to clarify current copyright laws.

Because the platforms use information from news sites to compete with them directly, fair use does not apply, said Danielle Coffey, president and CEO of the News/Media Alliance, which represents over 2,000 publishers.

“Although local news outlets are fighting for their lives, people are interested in the news more than ever,” Coffey said. While revenue declined 56% in the last 10 years, traffic to top news sites increased 43%, she explained to lawmakers.

To build AI software such as ChatGPT, developers use text from many millions of websites so the technology can analyze what words are commonly found together to write its own coherent sentences. Google’s C4 data set, used for AI models including Google’s T5 and Facebook’s LLaMA, includes 15 million websites. Coffey pointed to a Washington Post investigation that found half of the 10 most-used websites in the C4 data set were news sites.

When ChatGPT was originally released, it was trained only on information up to September 2021. Now, both ChatGPT and Meta AI have real-time access to the internet using Bing search, making them formidable news providers. Companies like Google or Meta can have AI programs summarize or even copy online articles for search users.

This “ingesting and regurgitating” means news providers lose out on web traffic, said Curtis LeGeyt, president and CEO of the National Association of Broadcasters.

These tools not only directly rob news sites of revenue, but make it impossible for outlets to understand their audiences and how to best serve them, said Roger Lynch, CEO of Condé Nast, which owns magazines like The New Yorker and Vogue.

“They are directly threatening the viability of the media ecosystem,” Lynch said.

The technology’s ability to produce misinformation, intentionally or otherwise, is also making trustworthy reporting both more difficult and more valuable. The realism of deepfakes – audio, photo or video manipulated to mimic someone else – means broadcasters need to invest even more time and money into verifying the images they see, LeGeyt said.

Journalists themselves are also targets of deepfakes, which sow distrust even in established local news sources. LeGeyt referenced a recent video clip of two broadcast TV anchors that was manipulated to create an antisemitic rant.

For most digital publishers, more than half of traffic originates from search platforms, Lynch said. News site operators said they have no bargaining power with the platforms, as opting out of inclusion in their AI training data sets means losing that traffic.

Some news organizations, including The Associated Press and Axel Springer, owner of Politico and Business Insider, have struck deals allowing OpenAI to train ChatGPT on their articles. In return, the publishers can use OpenAI technology. The AI organization also committed $5 million in funding for the American Journalism Project to help local outlets experiment with AI technology.

By contrast, The New York Times filed a lawsuit against OpenAI and Microsoft in December, raising similar concerns about copyright. OpenAI’s “unlawful use of The Times’ work to create artificial intelligence products that compete with it threatens The Times’ ability to provide that service,” the complaint states.

Most news outlets do not have the resources to hold OpenAI accountable in court and may be more in need of industrywide standards for licensing agreements, Blumenthal said.

Tech companies should expect to license news stories, just as music publishers license songs, LeGeyt said.

“Local television broadcasters have done literally thousands of deals with cable and satellite systems across the country for the distribution of their programs,” he said. “The notion that the tech industry is saying that it’s too complicated to license from such a diverse array of content owners just doesn’t stand up to me.”

Some expect copyright law to shape which countries attract AI development in the future, Politico reported. For instance, AI startups may flock to Japan, where current laws do not generally require licenses or permission to use training data. It could take up to a decade for a definitive answer in the U.S.

Witnesses agreed that broad legislation is not yet needed. Clarifying copyright law may be enough to get AI companies to begin compensating news outlets appropriately for the use of their work. But if not, legislators should be ready to act, Coffey said.

“Unless some marketplace corrections are immediately seen, we call on Congress to step in and investigate these practices,” she said.